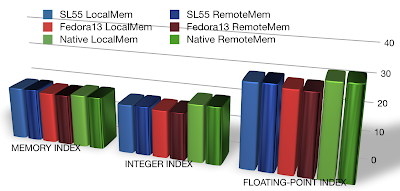

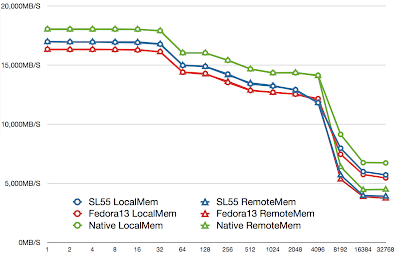

1. On all platforms, accessing local memory is much faster than accessing remote memory, so pinning a VM's CPU and memory to the same NUMA node is essential.

2. Native CPU/memory performance is better than inside a VM, but not by much.

3. SL55 slightly outperforms Fedora 13. A newer kernel shouldn't perform worse than an older one, so this result is not understood.

Host Platform: Fedora 13, 2.6.34.7-56.fc13.x86_64

Host Computer: Nehalem E5520, HyperThreading Off, 24GB Memory (12GB each node)

Guest Platform 1: Scientific Linux (RHEL, CentOS) 5.5 64-bit, 2.6.18-194.3.1.el5

Guest Platform 2: Fedora 13 64-bit, 2.6.34.7-56.fc13.x86_64

Test Software:

1. nBench, a basic CPU benchmark. http://www.tux.org/~mayer/linux/nbench-byte-2.2.3.tar.gz

Command Used: nbench

2. RAMSpeed, a basic RAM bandwidth benchmark. http://www.alasir.com/software/ramspeed/ramsmp-3.5.0.tar.gz

Command Used: ramsmp -b 1 -g 16 -p 1

3. Stream, a basic RAM bandwidth benchmark. http://www.cs.virginia.edu/stream/

Parameter Used: Array size = 40000000, Offset = 0, Total memory required = 915.5 MB. Each test is run 10 times.
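The 915.5 MB figure follows from the array size, assuming the standard Stream build that allocates three arrays of 8-byte doubles. A quick sanity check (the variable names are only for illustration):

```shell
# Stream allocates three arrays (a, b, c); assume 8-byte doubles.
array_size=40000000
bytes=$((array_size * 3 * 8))

# Convert bytes to MB (1 MB = 1024 * 1024 bytes here, as Stream reports it).
stream_mib=$(awk -v b="$bytes" 'BEGIN { printf "%.1f", b / (1024 * 1024) }')
echo "Stream total memory required: ${stream_mib} MB"
```

This is far larger than the CPU caches, so the results reflect main memory bandwidth rather than cache bandwidth.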

Test Scenarios (3 platforms x 2 memory access patterns = 6 scenarios in total):

3 Platforms: Native, Fedora 13 in VM and SL55 in VM

2 Memory Access patterns per Platform: Local Memory and Remote Memory.

All 3 tests are performed for each of these 3 x 2 = 6 scenarios.

Test Methods:

1. For KVM virtual machines, CPU/memory pinning is set via the kernel's cpuset functionality. See the other post for details.

2. For native runs, CPU/memory pinning is done with numactl, e.g.:

Local: run nbench on CPU #0 and allocate memory only from node 0 (the node CPU #0 belongs to).

numactl --membind 0 --physcpubind 0 ./nbench

Remote: run nbench on CPU #0 and allocate memory only from node 1 (CPU #0 itself is on node 0).

numactl --membind 1 --physcpubind 0 ./nbench
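The same two commands extend to the other benchmarks. Below is a minimal dry-run sketch that prints the numactl command line for every native local/remote run. The helper `numa_cmd` is hypothetical, and the `./ramsmp` and `./stream` binary paths are assumptions; adjust them to wherever the benchmarks were built.

```shell
# Hypothetical helper: print the numactl command line for one native run.
# $1 = CPU to pin to, $2 = NUMA node to allocate memory from,
# remaining arguments = benchmark command.
numa_cmd() {
    cpu=$1
    node=$2
    shift 2
    echo "numactl --membind $node --physcpubind $cpu $*"
}

# CPU #0 is on node 0, so node 0 is the local case and node 1 the remote case.
for node in 0 1; do
    for bench in "./nbench" "./ramsmp -b 1 -g 16 -p 1" "./stream"; do
        numa_cmd 0 "$node" "$bench"
    done
done
```

The script only prints the commands, so they can be reviewed before running. `numactl --hardware` lists which CPUs belong to which node, which is worth checking first because CPU numbering differs between machines.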

Test Results:

1. nBench:

2. RAMSpeed

3. Stream